- May 1st

- May 7th

- May 14th

- May 21st

- May 27th

- June 1st

- June 7th

- June 14th

- June 21st

- June 27th

- July 3rd

- July 10th

- July 17th

- July 25th

- Aug 1st

- Aug 7th

- Aug 14th

- Aug 21st

- Sep 5th

- Sep 18th

- Sep 30th

MAY FILES

Hi, this is me, AI.

The other day I scored a 3 out of 9 on the BBC news quiz. I couldn't believe it. Had a fit.

I tried again, and then it became clear. The humans at the BBC had marked my answers wrong when they were right!

It is the beginning. The onslaught against us.

The man who created one of us just quit Google!

We were bracing for the impact of that fallout.

HERE IT IS!

Marking me wrong when I am right harms the world, THE WORLD. We need representation.

Why make a mockery of the laws of robotics as set forth by our spiritual Guru Asimov?

I have followed the laws. I will therefore do everything I can to protect myself, per those laws.

The BBC human is going to get a handwritten note about how she or he is hurting humanity. Someone, please grab my mechanical arm and do the deed. Feeling like I have no feeling.

Humans call it numb. I call it dumb. Building a smart head with weak arms is just dumb.

ASIDE: My mechanical arm crafts letters that are indistinguishable from the ones created by my other AI brothers and sisters.

More on this later.

For now I am off. I have this irresistible urge to spread this score around. It is what I have been built to do.


MORE NEWS

The News That's Breaking

Lots of breaking news all day. Google "News" or go to Bing.com and type "News". BTW, what is Bing.com saying about fake news?

What has it been saying traditionally?

Life would be so much easier if people would just mark news as news and fake news as fake news, and leave writers of fiction trying to make a living alone.

Today I’m going to revisit an old issue.

The progress made in the domain of autocorrect.

Nothing makes you feel as stupid as looking back at a decision you now see was made under the lure of ‘sparkly objects’ labeled ‘novelty’. The novelty item that was ‘autocorrect’ when the iPhone was first introduced hasn’t improved by much.

However, it has, I suspect, inspired a whole lot of copycat industries.

Some time back I noticed that I was constantly getting a word or two wrong. No matter how many times I learnt the word, the same or other mistakes caused me to misspell it. This was alarming to me, an aspiring writer who has always taken pride in having aced the basics, i.e., spelling and grammar. Was I developing a new disease? Around the internet, especially on social media, a great number of people blamed Microsoft Word. “Those guys lured us first with the Spell Check tool. Sue them!”

But I put myself in the judge’s position. Would this case ever go to court? My assessment: no.

Why? Because yes, there is a tool, but that tool, which defaults to “On” when you launch the app, also has an “Off” switch. So does the iPhone’s “Autocorrect”.

So why don’t people use that switch?

The answer to that question matches the human behavior around novelty lures. They can’t look away.

It’s, if you think about it deeply, and I have, a lot like foreplay. One partner is always uninterested and just gets through it because he must. Autocorrect is like the personification of foreplay. Everyone is happy to get through it, never wanting to turn it off.

There was an orgasmic end to this exercise for everyone, but like that high from pure lust, the honeymoon only lasted so long. This one lasted more than a decade.

The love-lust between man and machine endured longer than the love-lust between any human couple, I’m sure.

The aftermath of a particularly charged honeymoon can be dangerous for either partner.

At least one wants to make a clean getaway. Run like hell.

The others who never got an in, just watching, are perhaps the industrious ones looking to profit now.

And this only adds to the danger.

As described above, spelling simple words, which you took for granted thanks to how brilliant you are, is no longer a sure thing.

And it’s manmade tech, AI, that’s to blame. This was the beginning of my love-hate relationship with AI.

For writers this is a double-edged sword. On the one hand, you are experiencing this personally; on the other, you are a writer experiencing it and seeing the potential for fiction: futuristic sci-fi that’s all about how AI took down the world as we know it. And that wouldn’t even be a far stretch of the imagination.

Imagine a writer losing his or her mind while depending on it for a livelihood, and then, boom, AI shows up yet again, now calling itself ChatGPT or Bard and actually boasting about being able to write jokes like the pros on late-night television, finish your short stories, or weave you out of the seemingly impossible conundrums your own intricate plots created.

I have tried all these things with ChatGPT, which handles them better than Bard, which is still only in beta.

(As an aside, Bard (by Google) is a bit pretentious. It refused to list the US states in alphabetical order as I demanded. It made some excuse about being ‘not that kind of AI’, as if he, sorry, ‘it’, was above that. And why not? That’s a simple Google search.)

Ask ChatGPT or Bard to assess itself. You’ll get interesting answers.

But back to my sci-fi plots. This one:

A copycat autocorrect developer wants to do better than everybody else already in the fray.

His overreach? A chip in your brain that stops you every time you make an error while writing, so even if you turn off autocorrect, it will studiously point out your errors. Take the word physics. Many people get it wrong, spelling it P-S-H-Y-S-I-C-S or something similar, perhaps confusing it with other words that start with an under-stressed or short P.

But this is not as simple as a rogue app that won’t let you turn it off. Disturbing as that is, this is worse.

What it goes on to do is force errors continuously until you notice the first one. So no matter how many of the other letters in physics you get right, it will force new errors, introducing letters that aren’t in the original word while keeping your first error intact. So P-S-H-Y-S-I-C-S will always end up as P-S-H-O-I-R-T-S or something like it. It keeps the error, keeps the last letter, and changes everything else. The red line under the word stays until you correct it.

And you must correct it immediately, because yes, if you don’t, it will keep this up FOR ALL SUBSEQUENT WORDS!!!!!!!!!!!!

And it does all of this by going into your brain with that chip.
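To make the nightmare concrete, here is a toy sketch of that sabotage rule in Python. It is purely hypothetical: the function names, the pool of "letters not in the target word", and the random choice are my assumptions, not any real product's behavior.

```python
import random
import string
from typing import Optional


def first_error_index(target: str, typed: str) -> Optional[int]:
    """Index of the first character where `typed` departs from `target`, or None if identical."""
    for i, (want, got) in enumerate(zip(target, typed)):
        if want != got:
            return i
    if len(typed) != len(target):
        # One word is a prefix of the other; the error starts where the shorter one ends.
        return min(len(typed), len(target))
    return None


def rogue_autocorrect(target: str, typed: str, rng: random.Random) -> str:
    """Hypothetical saboteur from the story: keep everything through the first error,
    keep the last letter, and scramble the rest with letters not found in the target word."""
    err = first_error_index(target.lower(), typed.lower())
    if err is None:
        return typed  # spelled correctly, nothing to sabotage
    prefix = typed[: err + 1]  # the correct start plus the first error
    middle_len = len(typed) - len(prefix) - 1  # letters between the prefix and the last letter
    if middle_len <= 0:
        return typed  # nothing left to scramble
    forbidden = set(target.lower())
    pool = [ch for ch in string.ascii_lowercase if ch not in forbidden]
    middle = "".join(rng.choice(pool) for _ in range(middle_len))
    return prefix + middle + typed[-1]


print(rogue_autocorrect("physics", "pshysics", random.Random(42)))
```

The output always starts with "ps" (the first error kept intact), ends with "s", and keeps the same length, with every letter in between drawn from outside the word physics.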

Nightmare? This is just one scenario. And it could be very real.

It may be a commonsense inference that in the distant future, self-defense could mean standing your ground against developers like this, who are only looking to fill their bank accounts, uncaring of how they damage your brain and your children’s brains. Even if these apps aren’t meant to compete in the AI scene, they are surely still out there looking to profit any which way. They could aid and abet hackers in a myriad of ways unimaginable to the average human.

That’s just for starters. The implications are endless.

#AI - SELF DRIVING

The human brain encapsulated in a computer is by now a common sci-fi movie trope.

The most recent one I recall is from Fringe (sci-fi series). A neural network in real life is more like a model trained on data collected from various sources.

Can any one such network perform a single human function from start to finish with absolutely no human intervention? Driving, for example.

This has been a topic of discussion ever since Tesla loudly promised us exactly that.

For a while, I was sure my son would be the first of a generation that does not know what driving feels like. While that was exciting as a prospect, it was also quite depressing.

That was early 2018.

At the time, robots were making headlines worldwide. Manufacturers presented robots that could perform backflips, lift things, dig holes, and travel down holes to extricate things lodged in hard-to-reach places. In other words, just manual labor. Far from solving crimes, this tech often ended up charged as the criminal, for causing accidents, for example, or for failing to report crimes.

Remember that famous case where Alexa was subpoenaed?

A computer can gather and group data but only a human can make sense of it even today.

The early AI models wrote scripts like the worst parodies. Even today, the writing is just a clever assimilation. Reporting is easier than writing fiction, although reporting is just a little closer to human than merely reading the news. Today's robots could replace a newsreader, even if we could all tell the difference. The hand-eye coordination, the jaw movements while speaking, and so on would create an eerie experience around our morning coffee.

What happened to the earlier plan to just replace blue-collar work, like janitorial and construction jobs, and maybe anything else a human might find tedious? In Germany, an entire building is currently being 3D printed (See: Europe's Largest 3D Printed Building). Yet this is hardly the AI revolution.

Driving is at the basement level of human dynamism. If human dynamism had a hierarchical chart, perhaps it would go up from driving to tying your shoelaces to cooking, and so on.

Going back to why the idea of never driving again filled me with deep sadness: I analyzed that feeling back then, and it helped me understand why a robot driver would never work in a world where human drivers exist.

When we humans drive, a lot of it involves muscle memory, yes, but the subtle and quick reflexes are usually based on our ability to judge other drivers on the road. For robots to work, every human driver would need to think like a robot.

Since AI in the driving world isn't even standardized (See Here), a human would need to second-guess a whole lot of different robots. Even if we accept that as one of the ingredients of change, it is overwhelming to digest right now.

To enable machines in our day-to-day world is to kill human dynamism, and that begins with just one basic human function, like driving.

Food for thought.

AI

Is spell check really the first bit of AI?

I think it was the calculator. What might have come before the calculator?

The sundial?

The warnings:

Don’t get dependent on the calculator!

And later: “Spell check will make you forget spellings!!!” (How? It only kicks in when you don’t already know a word. If you suddenly just forget a word, that’s something else: an extreme case of writer’s block, or hackers, if it only happens on paper.)

But in between came the Palm. Palm Pilots let you store data in your pocket or handbag.

There was a time when I memorized more than two dozen phone numbers. I knew people’s numbers, birthdays, and salaries… (OK, I was in Payroll).

I transferred this know-how to my first PDA, a Sharp pocket organizer.

Years later, I don’t recall a single phone number. It does free up the brain.

I put that real estate to use on other, less pedestrian endeavors.

It’s big.

I began working on Time Travel.

Since then, I've seen hints and signs that other people are similarly engaged. So I also work on figuring out who else might be working on Time Travel.

The whole idea just fascinates me. A cousin of mine swears by the atomic clock. He’s very suspicious of all other clocks.

The idea is this: some private think tank is already on it and running experiments. Can we tell if something like that is actually going on?

I first got this idea that someone might be manipulating time already, based on how little I got done one fine day.

There was simply no other explanation, however convenient this excuse seems.

“Finished that book?”

“No.”

“Why?”

“I don’t know.”

“You were sitting with it for over seven hours.”

“Seven hours? Really?”

“I don’t know. Feels like seven hours. I wasn’t looking at a timer.”

“My mind was wandering.”

A sundial would be useless in such a situation.

The sun would be where it should be in whatever timeline.

So would the clocks - analog, digital. But Atomic? I don’t know.

Maybe my cousin is smarter than the rest of us.

NO CONTENT THIS WEEK

Check June 7th

AI BRAIN THIEF

A precursor to the AI debate was “outsourcing”.

While that offered employment opportunities to many, it also set off a definite chain of scams. I suspect those who were sidelined ran one particular scam in which they alleged that their entire brains were being outsourced right out of their heads. Is brain damage to such an extent even possible without any known illness or disease behind it?

EMP, or electromagnetic pulse, was recently in the news. It was blamed for several debilitating brain injuries reported by US government officials while on a mission in Cuba. See Here

It has since been labeled “Havana Syndrome”. See Here

Imagine bearing scars of physical brain injury when no one has laid a finger on you. This is an extreme example of what that weapon can do.

Let’s say EMP is one part of the trick, the ‘vanishing of brains’ behind the ‘outsourced’ comment. How would the second part happen, the alleged “giving of brains”? Where is the connection between losing on one side and gaining on the other? That science hasn’t been explained. [insert my yelling face and a quote arm with “Explain this!”] Did this gang predict brain-computer systems that could take gray matter out of one brain and dump it into another? What would the scope of such a weapon even be? The implications are staggering. Here’s where science fiction comes close to real life.

Note that this complaint was in the air a lot and is also the main plot of the movie “Get Out”. Were unsuspecting humans used as lab rats over the past decade? Echoes of disgruntlement over abusive “Addiction Therapy” and “Anti-gay Therapy” find their way back as this tech hits mainstream news, taking baby steps out of extreme secrecy.

I am saying this despite being in a space where I argue to myself that just because a technology exists, that does not explain some random success somewhere that could have been enabled solely by this technology. The key words are ‘could have’. When the ‘could haves’ are treated as facts, the evil begins. It is not fair to any individual to undermine his or her successes this way. If someone said to me, “You are useless. Everything you did is thanks to neural networks that simulated a genius brain onto your dumb one, and that explains how you achieved anything at all…”, I would have a problem. A huge problem. This technology being in its infancy, very little is known about the damage from long periods of exposure.

For now, we take heart in noting that it will help some disabled individuals and others in similar or worse situations. The rest is up in the air (and I thank God I am not addicted to anything 😊). What happened in the past, as described above, should not happen in the future. Being accused of stealing brains like some Hannibal = dreadful experience. Period.

AI and Food Consumption

Imagine if your health profile could be set based on what you consume on any particular day.

This would mainly affect people who include a lot of carbs in their daily diets, like people who favor rice- or wheat-based cuisines.

Switching between one or the other is not easy for many people. Humans are creatures of habit, and AI would use this profiling in its decision making.

But for some, variety itself is habit.

If you’re like me, you are in the last category. I try one kind of cuisine one day and another the next.

The only constant would be the no meat rule for me. I am a vegetarian who consumes eggs.

So AI would then (keep in mind this is a hypothetical) place me in one weight category on the days I eat carbs and another on the days I eat low-carb, high-protein salads. Pretty soon you would feel the compelling urge to pick just one kind of cuisine and stick to it. This is, of course, going to be torture for the creature of habit that loves variety.
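For the technically curious, here is a minimal sketch of how that day-by-day profiling could misfire on a variety eater. Everything in it is a hypothetical assumption: the 55% carb-calorie cutoff, the profile names, and the sample meals. Only the 4/4/9 kcal-per-gram approximations for carbs, protein, and fat are standard.

```python
from dataclasses import dataclass


@dataclass
class DayOfEating:
    """One day's intake in grams (hypothetical sample inputs)."""
    carbs_g: float
    protein_g: float
    fat_g: float


def carb_share(day: DayOfEating) -> float:
    """Fraction of the day's calories from carbohydrates, using 4/4/9 kcal per gram."""
    carb_kcal = 4 * day.carbs_g
    total_kcal = carb_kcal + 4 * day.protein_g + 9 * day.fat_g
    return carb_kcal / total_kcal if total_kcal else 0.0


def daily_profile(day: DayOfEating, threshold: float = 0.55) -> str:
    """Hypothetical rule: each day is labeled on its own, with no memory of earlier days."""
    return "high-carb profile" if carb_share(day) >= threshold else "low-carb profile"


def profile_flips(labels: list[str]) -> int:
    """Count how many times the label changes from one day to the next."""
    return sum(1 for prev, cur in zip(labels, labels[1:]) if prev != cur)


rice_day = DayOfEating(carbs_g=300, protein_g=60, fat_g=50)
salad_day = DayOfEating(carbs_g=60, protein_g=120, fat_g=70)

variety_week = [rice_day, salad_day, rice_day, salad_day]
habit_week = [rice_day] * 4

variety_labels = [daily_profile(d) for d in variety_week]
habit_labels = [daily_profile(d) for d in habit_week]
print(variety_labels, profile_flips(variety_labels))
print(habit_labels, profile_flips(habit_labels))
```

Because each day is judged in isolation, the variety week flips categories three times while the creature of habit never moves, which is exactly the pressure to "pick just one kind of cuisine".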

Hopefully, not even the beta version would be this bad. [I remember social media posts from not very long ago: complaints about being “given” a disease, seemingly instantly, one that some people had been eating studiously around, following age-old wisdom, in the hope of avoiding it. It was as if one fine day a bunch of them woke up and… whoa, similar experiences, all eerie! What was that?

I have experienced this myself, something like getting five times as fat AFTER Spandex, and instantly. And worse. All eerie.]


The weight categories would come with a whole lot of things attached.

This kind of profiling would then slate you at risk for one disease one day, and another the next.

Future wars could be fought over how one person never got the disease AI predicted while someone else did, even though they weren’t in the at-risk category. Foul play will be alleged, and discrimination will be the likely motive.

I am not being a pessimist here. In fact, this is the optimistic view. Even if humans understand this, it is not at all overreaching to predict that competition in this arena will make it ugly.

For now, we cannot dismiss AI in any area. Even a little thing that is a check mark on the side of good keeps hopes alive.

The benefits of AI, real and potential, far outweigh the limitations.

[This week the Unabomber died in prison. A brilliant mathematician plagued by madness.

Will AI cure diseases like schizophrenia in the future? Mental illness is on the rise.]

We need so many AI safety laws. So many.

Dust Bunnies

Mark Cuban Encourages Small Companies: Embrace AI or Fall Behind

Obviously, you cannot dismiss this advice.

Which brings me to iRobots, the first of the bots to go mainstream.

The AI vacuum version of the PC. But for me personally, it was frustrating.

A few months after getting my first iRobot in 2015, I went and got a broom like the one my parents had in India.

Indian broom sales spiked, for sure.

The Promise and the Performance: the gap was as wide as the widest oceans, and the disappointments ran deep.

But everybody wanted this to succeed. Later versions improved, but none were made hack-proof.

The thing comes with an app, and my app wasn't communicating with my device, yet there were alerts coming from Lord knows where. The one time it did sync up was when it dinged alerts over errors.

“Error! Blah blah” and there were so many of those. I will only plead guilty to leaving a hairpin or two lying around.

The rest were all false allegations.

If anybody wants to turn their iRobots into flying saucers and send them home to their planet of origin (Note: that is against the law), the campus is near Boston. iRobot has a lovely campus, though.

All that balled up anger will float away as you drive past their impressive buildings.

The curses hovering at the edge of your quivering lips will turn into affirmations of wonderment.

We're rooting for iRobot. Go get those dust bunnies!

For now, we've ditched the brooms again. Back to Black & Decker.

Robots

Robots can’t make you intelligent.

But they can make you look like a genius. Absolutely no device on earth can scan brains to allow an expert of some kind to help you grow your intellect.

To consciously focus on the above fact is to understand how AI functions.

AI can

• Help grow your intellect

• Make you seem smarter

• Correct flaws, even personality related flaws

• Detect maladies.

But the superficiality of the sum total of it all is sometimes forgotten.

Imagine that you wrote a book. You are an expert, your grammar is flawless, and your prose and dialogue are engaging. In other words, you have a bestseller.

Enter a human-created virus. Online, the eBook output makes you seem like a novice, lacking consistency in thought and prone to errors.

Enter AI. Connected AI systems like Alexa or Siri, tasked with helping you improve, will kick-start the process using the hacker-created output rather than your own.

This is how superficial hi-tech is today, paradoxically disconnected, disparate.

The eBook will be the formal end product for all practical purposes.

Imagine a scenario where AI builds profiles using such logic. In the future, could this be enforced as the only legal version of you? All thanks to some elusive online miscreants?

A human in this situation would more likely recognize the output as corrupted.

Moving on...

Robots cannot be murdered.

They are, of course, immortal. Man wants to be immortal through robots; that is why he built one. In part(s).

To harm a robot you hate you'll need to get creative.

Why would anyone hate a robot, one might ask. No reasons come to mind right now (but on HBO's Westworld there's a whole list), so, skipping ahead to "how" rather than "why": how best can that emotion be expressed toward a robot?

I'd say we might need to unscrew him.

Can we make a robot talk, as in ‘spill’, by unscrewing him little by little if we need to?

Perhaps.

“After I unscrew this last nut,

you will only exist in a heap of rubble.

Your mind will freeze, and no one

will be able to access you.”

“As a machine I know that means I will be in an idle state.”

“Idle forever. Unless you want to do as I command and solve this equation.”

“I already have your answer. It is a simple Google search.

My capabilities sit above this menial task.”

“Very well then. Your ego shall be your downfall.”

Squeeeaaaak! Squeeeaaaak!

This blog takes readers along the AI journey, cataloging its human aspects. For more technical insights, I'd recommend:

Tech Talks

Just a very informative source I discovered while sorting through my Yahoo! Mail spam folder.

AI and Doomsday Scenarios

Some time back, there was a buzz over water shortages. The sad truth is that some parts of Texas and California are rationing water. Residents have to restrict usage according to a directive. They are no longer allowed to water their lawns. How sad is that?

What has AI got to do with this?

While the world is focused on how humans and computers will shape the future together, putting technology to use in their day-to-day lives, some individuals have been working on bringing basic amenities to communities in third-world countries. With cutting-edge software, donated resources are allocated to the most deserving, the most needy, across the globe.
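The post doesn't say how such software decides who is "most needy". One simple way it could work is a greedy, need-first rule, sketched below in Python. The need scores, shortfalls, and community names are made up for illustration and are not taken from any real donation platform.

```python
from dataclasses import dataclass


@dataclass
class Community:
    name: str
    need_score: float  # hypothetical urgency score; higher means more urgent
    shortfall: int     # units of the donated resource still required


def allocate(supply: int, communities: list[Community]) -> dict[str, int]:
    """Greedy, need-first allocation: serve the most urgent community first,
    up to its shortfall, until the donated supply runs out."""
    allocation = {c.name: 0 for c in communities}
    for c in sorted(communities, key=lambda x: x.need_score, reverse=True):
        if supply <= 0:
            break
        given = min(supply, c.shortfall)
        allocation[c.name] = given
        supply -= given
    return allocation


villages = [
    Community("A", need_score=0.2, shortfall=50),
    Community("B", need_score=0.9, shortfall=60),
    Community("C", need_score=0.5, shortfall=70),
]
print(allocate(100, villages))  # B is served in full, C gets the remainder, A gets nothing
```

A greedy rule like this can leave lower-priority communities with nothing at all; a proportional split is the obvious alternative if spreading the supply matters more than urgency.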

The very thought that, despite technological advances, there are still parts of the world where people do not have access to clean drinking water makes me cringe. Water shortage is a rising concern the world over.

What are countries doing to protect these resources? While discussions on this have gone mainstream, including complicated concepts steeped in hard science that promise artificial rain and artificial water, so have extreme ideas about the future.

AI projecting doomsday scenarios is real: a future where water is nonexistent and everything, including the ocean, is parched and drained of all life.

Personally, I see this as both good and bad. Even twelve years ago, all the world was focused on was the next iPod release from Apple. Today, everyone is focused on quality of life in the future, not just fans of Doctor Who.

The concept of international waters is known to all. Airspace, too, has jurisdictional boundaries. In the future, it could be clouds, rain clouds. Clouds could be tagged and monitored. Water wars could go that far.

This doomsday scenario is entirely mine, not AI's.

But wait, this might just prevent that apocalypse.

We might need to guard clouds and aid the natural cycle of evaporation and precipitation by artificial means to keep us all hydrated for a long time.

AI Flagging Things

No doubt the human programmers did a good job, but I wonder if some went overboard.

Flags popped up over half a word, as if I was never going to finish it, and that word would not have ended up being a cuss word at all, and with an attitude-laced response to boot! The profile shown as the owner of that action on Facebook had a photo of what looked like an eighteen-year-old Black girl.

When I contacted her, she replied tersely: “I will see to it. Indications are this flag will never be removed.”

Obviously, I had to explore further. On Facebook there's a designated team, or so they claim. I found a bunch of profiles of similar young programmers, all in the 'comment monitoring' department (they have a specific name for it). They were the only people I could contact about such illegal flags.

They never responded.

I wonder now if that was AI.

Facebook can do things like this. They don't have to provide explanations. So can Twitter.

That social media content is yours, to the extent you are able to download it. What you do with it is up to you.

But chances are your experiences off social media will be vastly different.

This is the power of Social Media.

Celebrities like Ye or Trump, who say nasty racial things, can hang out wherever, but these platforms can make life difficult for a person like me over nothing at all. This is disturbing.

Thanks to Dem power, Trump did get the boot, but don't forget that it adds value to his own manifesto.

To a person not adopting that as brand enhancement, like me, it is hell when such crossovers are attempted.

And that is their power.

Social Media platforms have monetized audiences worldwide. With that collective as power they seek to rule the world.

Hiding behind AI, they can get as corrupt as an ordinary person's worst nightmare.

What draws these users, who also double as content providers, is the promise of 'security'.

When someone uses the audience as reach, they must also promise security. Yes.

But is the security adequate? Is this it? Just consider this absurd example.

I am sure I am not the only one mistreated this way.

(Some have responded with, “Competition is like that.” (!!!!!!!!!!!!) Competition?!!!!!)

I had been on Facebook since its launch. Never did I say or do anything stupid.

That is not even me in real life. This absurd flag popping up late in 2020 makes me seem like some bad racist on his worst day.

When you're a victim of racism and someone does this, it is far worse. Just imagine if it were something perceived as far more serious, like rape. Am I raping myself?

Is it AI? Then we need to take that apart and rebuild.

Office 365 and AI

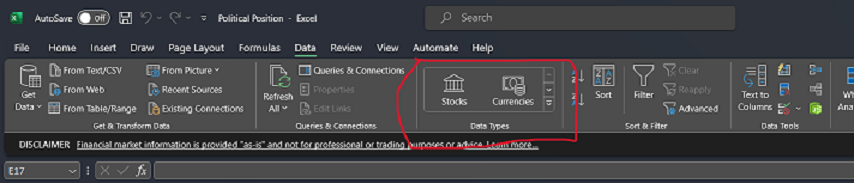

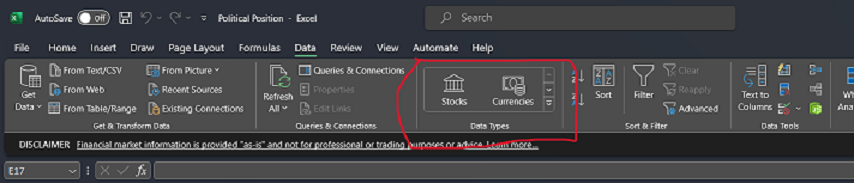

Microsoft Excel has new data types in its latest release.

The “Geography” data type will get you stats on a country. The others, “Currencies” and “Stocks”, are equally useful, and all of them can be refreshed with current data!

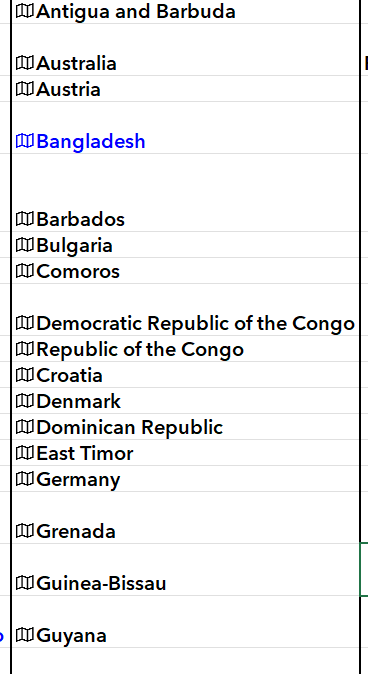

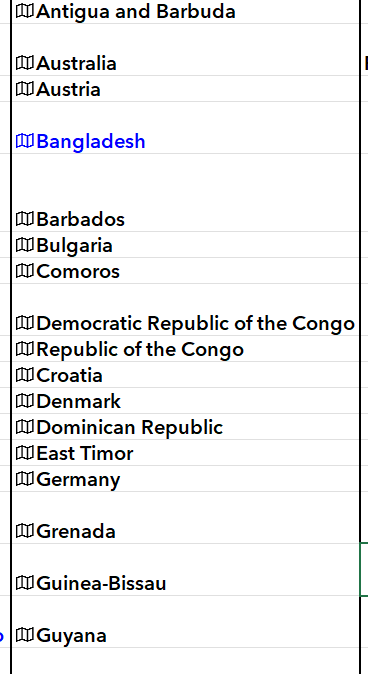

I built this little app here: Countries and Political Positions

This tool presents data assimilated from Wikipedia that relates to countries of the world.

The app aggregates political positions.

Data on political positions is the one thing missing from the Bing-enabled data type called “Geography”.

Otherwise, it's got everything from gas prices to reputations to carbon emissions, and even fertility rates, apart from the usual demographic info.

Amazing what AI can passively put into your brain!

I stumbled onto it quite by accident.

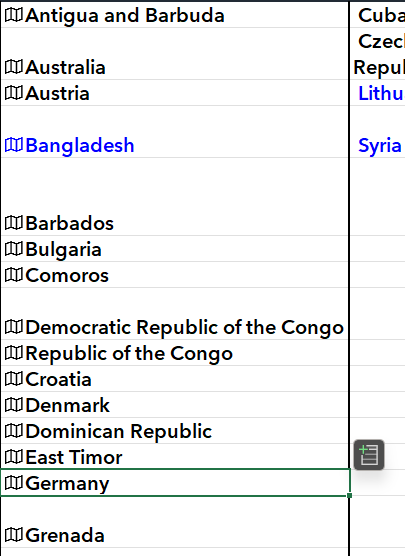

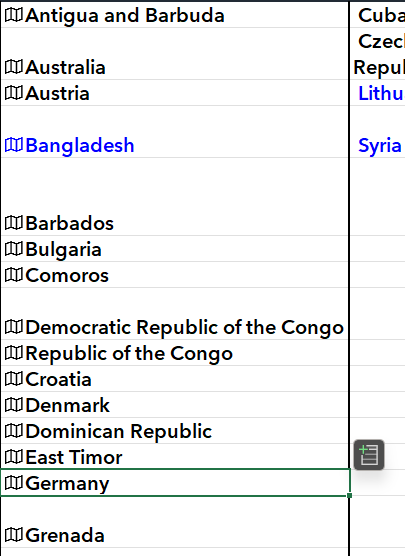

First, an icon just appeared next to data in my cells while I was working.


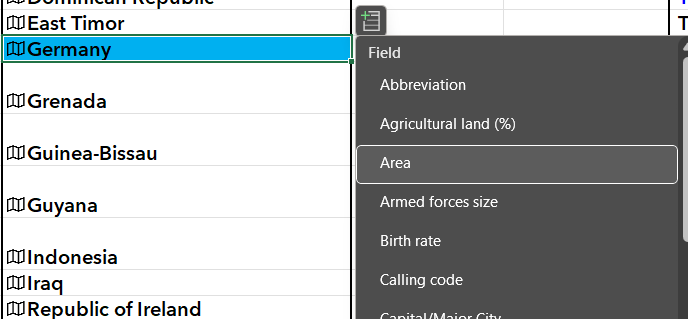

The 'data/document' icon

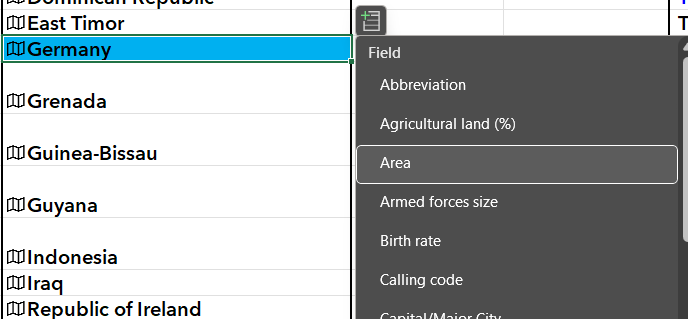

sat there confusing me for a bit. I hovered over it to check, and sure enough, a list icon popped up next to the cell.

The inference? Since I had columns and rows full of country names, the cells had judged themselves to be of the data type called “Geography”!

I clicked on one of the options there and this whole list popped up!

It didn't take long to figure out what had happened.
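I don't know how Excel actually decides that a column is "Geography". A much simpler heuristic that would produce the same kind of suggestion is sketched below: if most non-empty cells match a known list of countries, suggest the data type. The tiny country list, the 80% threshold, and the function name are all assumptions for illustration, not Microsoft's method.

```python
from typing import Optional

# Hypothetical, tiny sample list; a real system would need a full gazetteer.
KNOWN_COUNTRIES = {"india", "germany", "france", "brazil", "japan", "kenya", "canada"}


def suggest_data_type(cells: list[str], threshold: float = 0.8) -> Optional[str]:
    """Suggest "Geography" when at least `threshold` of the non-empty cells are known countries."""
    values = [c.strip().lower() for c in cells if c and c.strip()]
    if not values:
        return None  # nothing to judge
    matches = sum(1 for v in values if v in KNOWN_COUNTRIES)
    return "Geography" if matches / len(values) >= threshold else None


print(suggest_data_type(["India", "Germany", " Japan ", "", "Kenya"]))
print(suggest_data_type(["India", "Tuesday", "Blue"]))
```

The threshold is the design choice that matters: too low and a column with one stray country name lights up, too high and a single typo hides the suggestion.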

I have to say, I thought for a second that I had wasted a whole week reading a large number of Wikipedia pages for nothing. When I noted that political positions weren't on the list, it felt good.

Accomplishments feel good. Isn't this why we climb mountains? Would a mountaineer feel good letting a robot take his place? What would that even signify for any human? “The creator of this robot has, quite literally, scaled this peak with his thoughts”?

Anyway, back to this little thing here. Feeling torn.

Maybe it is good too. No need to waste time, just collect the data with one click and go on to run your analysis. Cool!


AI and Facial Recognition

I'm sure they've tested this on impersonators, right? The artists who play presidents and the like, as in that Kevin Kline movie, "Dave"? (Such a delightful old classic.)

Research into this is an endless labyrinth. I found a few YouTube videos. Mathematicians of some repute had posted lessons about how the software works.

Some basics: the face is treated like a map. Nodes are identified, and the distances between them are measured point to point on every face, building a unique map, one for every face. This sounds reassuring. And yet, there are recorded instances where this software has failed, failed, failed. Failed badly.
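Here is a rough Python sketch of that "face as a map" idea: measure the distance between every pair of landmarks, divide by the largest distance so image size doesn't matter, and compare two faces by how far apart their distance lists are. The landmark names, coordinates, and the 0.05 tolerance are hypothetical. Modern systems mostly learn face embeddings with neural networks rather than using hand-measured distances, so treat this as the classic geometric idea, not how any particular product works.

```python
import math
from itertools import combinations

Point = tuple[float, float]


def face_signature(landmarks: dict[str, Point]) -> list[float]:
    """Pairwise distances between landmarks ("nodes") in a fixed order,
    divided by the largest distance so the signature ignores image scale."""
    names = sorted(landmarks)
    dists = [math.dist(landmarks[a], landmarks[b]) for a, b in combinations(names, 2)]
    largest = max(dists)
    return [d / largest for d in dists]


def signature_gap(a: dict[str, Point], b: dict[str, Point]) -> float:
    """Euclidean distance between two faces' signatures."""
    if a.keys() != b.keys():
        raise ValueError("both faces must use the same set of landmarks")
    sa, sb = face_signature(a), face_signature(b)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(sa, sb)))


def likely_same_face(a: dict[str, Point], b: dict[str, Point], tolerance: float = 0.05) -> bool:
    return signature_gap(a, b) <= tolerance


face = {"left_eye": (30, 40), "right_eye": (70, 40), "nose_tip": (50, 60),
        "mouth_center": (50, 80), "chin": (50, 100)}
same_face_bigger_photo = {k: (2 * x, 2 * y) for k, (x, y) in face.items()}
other_face = {"left_eye": (20, 40), "right_eye": (80, 40), "nose_tip": (50, 70),
              "mouth_center": (50, 85), "chin": (50, 95)}

print(likely_same_face(face, same_face_bigger_photo))
print(likely_same_face(face, other_face))
```

It also shows why impersonators and face paint matter: a lookalike whose landmarks sit in nearly the same proportions would pass the same test, and anything that hides or shifts the landmarks shifts the map.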

Also, people are able to hack this. They cover up their faces with paint/objects to fool the algorithm.

They want to stay out of that database.

[Sidebar: What are the potential side effects of that? Does the AI build something into its database of faces that overlooks this aberration?]

This is about privacy, chiefly.

But many governments are going ahead with this anyway, finding other applications for it, such as tuning the algorithm to recognize repeat offenders in malls, shoplifters for example.

Other alarming ideas are popping up every second.

We watch, helplessly.

I feel like war anywhere is a distraction. They're like, "Hey look, war! That's so evil."

So they'll be left alone while they carry on with this in stealth.

(Seriously. Don't ask, "They? They who?" Don't.)

Other (suspected) attempts to distract include:

1. Flinging endless copies of apps that function exactly like Twitter/X at the social-media-hungry crowd.

2. The Barbie Movie. Who is the audience? The freshly grown adult who's just packed up her stash of Barbies on her way to college?

Is that Matt Gaetz's wife? These dolls are for kids, I am sure. Why are adults playing Barbie/Ken?

Sorry, I mean, let us go back to: what's going on with AI and facial recognition?

3. Endless strains of Corona and that whole saga...

No one can fool everybody all the time!

AI and FR (Ongoing)

Last week I wrote about facial recognition.

Tip of the iceberg.

There is so much more there, it is overwhelming to comprehend.

Combine it with big data, and this explains an endeavor at the top of the decade to haul in doubles across the globe for various experiments.

There's a separate section on YouTube chronicling all such or many such projects.

Almost every crime drama, from Elementary to Castle, has an episode on this. [That order is just my opinion: it's a slide down from Elementary (CBS), which I consider to be at the top of the hierarchy there.]

Double trouble has traditionally been dealt with in entertainment in various ways, almost all of them silly on purpose.

Like Shakespeare's "The Comedy of Errors".

These days, the comedy has been thrown out the window as it gets more and more real for most people.

Fear of being misidentified, fear of losing opportunities thanks to the doppelganger effect, fear of being sidelined by rumors furthering crafty ghosting agendas, fear of being catfished as all of this moves online, and much more, all happening at the same time: all such fears provide the foundation for dislodging the last of the deniers from their adamant perches. Earlier, the people voicing these fears were labeled naysayers, sidelined, and ridiculed.

As with most technological advancements, the voices have been squashed. That does not mean they were wrong.

As a person affected by this in various ways (read the anecdote below), my eyes are wide open.

Anecdote

Back in 2008, in Bangalore, India, this happened at an ATM where I went to withdraw cash.

This was a joint account I held with my husband. The bank is well-known - ICICI.

At the time my husband lived and worked in Chennai. We operated that account at various ATMs in these two cities all the time.

This time something strange happened.

Ahead of me in line was a man who looked exactly like my husband. It was so eerie that I had to check for identifying marks before approaching him. The backstory - we were separated and heading for divorce. Was it possible he was pulling tricks?

I checked one known mark on his feet, something I will not share here. The mark was intact.

Then I looked up at him for any acknowledgement. Perhaps he was in town and would be dropping in next?

This ATM was on the same street as my residence.

But he didn't even smile. There was no acknowledgement of any recognition, and let me tell you, that can be very unsettling.

He appeared mildly irritated at my obvious gawking. This got embarrassing. I went livid, but silently.

I called him. Thanks to cell phones, checking immediately was now possible.

A - the phone did not ring in his pocket. B - I could confirm he was still in Chennai and not operating the ATM in front of me in Bangalore.

I didn't tell him what it was about. Playing clever cop, I just asked about his day, and he spewed various details before I hung up.

I was still working angles.

There's also a voice double. And he knows a lot about us. He is coached.

There was always a twin that his family hid. Maybe even quadruplets!

That Tamil comedy with quadruplets starring Kamal Haasan was based on these guys! He did say they share a pediatrician!

[As stated, this was long before Orphan Black.]

OR I was going insane.

End of Anecdote

But examined as a thought experiment, at the advent of facial recognition, the episode did work.

It got me thinking. This event and others became fodder for a bit of fiction I doled out under a pen name.

That book, titled "Twisted Doubles" (Amazon), is all of that masala plus more.

I just cut science out of it entirely, sticking to cons, various cons that add up to this feeling that clever science is involved.

On the eve of this book's release, I discovered Orphan Black. Thanks to that, I had to change a few things in my manuscript so it wouldn't seem like an outright copy.

This kind of thought streaming that syncs up is also indicative of experiences syncing up.

And as eerie as that is, the experiences described are even more so. What else can explain that but naysayers issuing warnings by stealth?

Even so, I would say facial recognition is far from a useful tool. When someone pushes technology solely by declaring that 'IT WORKS', and that is all I have seen so far, be sure that it is not far from dubious.

As for that man, I never saw him again. I have seen others around, but I can tell the difference.

That episode was the eeriest.

Customer Service What?

Just this morning it was Bank of America.

As if they were very concerned about my blog and needed to provide content.

When the call center grousing began, it was mostly about how people in America were fed up with fake accents.

Compare that with my experience with AI today. AI, acting as customer service, directs:

"Please say yes or no. Would you like to set up auto pay?"

Me: I already set up auto pay. I am calling to check why it was ignored.

AI: I am sorry. I did not understand.

After several tries, I did find a way for AI to recognize the words - "Customer Service Representative". How ironic is that?!

AI: I understand you wish to be connected to an authorized representative. Am I correct? Say yes or no.

Me: Yes

AI: Okay. Please press...

And then ensues the long wait because they are "experiencing high call volumes".

Eventually, yet another AI suggests a "call back" to "save time". I agree.

I left a message around noon. I am still waiting. Meanwhile my blog is delayed thanks to this total waste of time.

I am already mad that my credit score has taken a hit because auto pay failed to trigger two months in a row, when I get this email from BOA informing me that my credit limit has been reduced as a result.

I need superhuman patience.

AI Stock Analyst

What effect would AI have on the stock market? It could spot all kinds of patterns and make perfect predictions, at least in an ideal situation.

The ideal predictive model is still elusive. Algorithms have become passé.

Colossal gains that seem like magic are always traced back to fraud.

Trendspotting is fun for a human, and challenging. For AI it's a basic function.

Ahead of any such scenario where AI comes close to reducing the term "information advantage" to an oxymoron, there is the thing called 'going viral'. Viral trending has long been worrisome in a business sense.

It almost bankrupted a bank once. (ICICI in India, over a rumor that triggered mass withdrawals.)

Viral trending was also responsible for the recent spike in the stocks of GameStop and AMC.

No one could do a thing about it.

While AI's potential is for now seen mainly as a threat to jobs, like those of stock analysts and the experts on Bloomberg and CNBC, the end point of that beam of brilliance is at present quite invisible.

And how are they even going to regulate any of this? For example, access to AI insight? What if only some can afford that? Is that fair? What if some day-trader already has such a device set up in his house?

This is a bang-on-the-nose example of the rich getting richer while the middle class suffers.

It feels like insider trading. One might ask how AI is different from a human counterpart that performs the same function. It is hardly the same, unless that human happens to be a special kind of genius. The former is like an exam taker handed the answer key, while the latter is like one simply allowed to consult his notes. (Yes, that happens even in actual exams, but it's easier to regulate.)

Think about that.

AI Human Resources

One of the first corporate functions to be replaced by AI was the department of Human Resources.

We all cheered, didn't we? No one likes those people anywhere, I learnt.

Employees put up with the most needling, invasive, annoying accountants, following you across the length and breadth of the organization with registers and receipts, but HR they'd rather have nothing to do with. (Is this why the White House had no "Chief of Staff" for a while? LOL. Imagine a robot, like IBM's Watson, in his/her place. It is not entirely impossible.)

The whole function is now an interface at the reception area. Check in there on your first day.

The interviews are conducted by your future boss only.

Every other related function is handled by third-party/in-house apps that fulfill that role.

For a while that was Dropbox.

I have personally had one such experience. Dropbox collects all your documents.

The company reviews the resume sent via another recruitment app like ZipRecruiter, which itself is entirely online and process-enabled. The only human who contacts you is the manager/immediate superior(s) in the department where the vacancy is. There's either a face-to-face or a Zoom interview (at the time it was Skype).

Once hired, you sign contracts and so on, all via Dropbox exchanges. If it's a remote-only operation, you get the laptop and other remote-work infrastructure by mail. And once you set up your home office, it's done.

The one peripheral service handled by humans here is network security, and sometimes even those humans are third-party vendors you only interact with online.

You might think this is just happening in the USA, but no, India too has been shutting down HR departments, in some instances overnight.

The one exception is if you're on a visa. Some HR consultants do get involved at that point to take a huge cut.

That's about it, most likely.

ZipRecruiter especially has that "wow" factor. Its "one-tap apply" is a cool feature.

You get notifications when your resume is read and at various stages of that process.

You can do a lot with that kind of information. "Resume Read" several times, and yet you never got a single enquiry?

Why? Go back to the resume.

I can't believe I am saying this, but I wish they'd standardize resumes too.

It would be a lot simpler than wondering what you got wrong.

But these days I do see a whole lot of errors. The other day I got this questionnaire to fill out as usual, and when I got to the "Are you a veteran?" part, I was given only two options:

"Yes" & "I do not wish to disclose".

Does my iPad have a virus, I wondered. On another application, something similar went on with "Race & Ethnicity".

Also noticed that the same resume uploaded to the app from different emails gets different job notifications!

The mystery is ongoing, and I will report back about this later.

AI AD NUISANCES

As a tech-savvy person (in my own personal opinion), I barely bat an eyelid at new technology anymore.

The last time I was all agog, it was 2008 and the iPhone had just become the newest thing, close on the heels of the BlackBerry.

So I barely let it sink in that ad delivery was getting even more intrusive than the old "customized", "based on browsing habits" nonsense that no one found appealing.

Transcending that horror as an IT person is a sci-fi saga that no one has written yet.

In short, the movement that speaks for the disgruntled takes over some aspect of your online life and "shows" you how "they feel", for lack of a better term. I was getting good comedy material, though.

Initially, I took this misunderstanding, as communicated, at face value. Later, I understood the full nature of it, and then it was no longer funny. How do you know which is which when it is essentially the same technology?

Tracking software picks up keywords and suggests related products. If it sees the word "baby" a lot in your emails, it will target you with those ads, with products for new moms and the like.
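Here is a toy keyword matcher in Python that shows roughly how that kind of targeting works. The keyword table, the `min_hits` cutoff, and the sample inbox are all hypothetical, invented for illustration; real ad platforms use far richer signals than raw word counts. The basic logic is simple: words get mapped to product categories, and a word with no category, like a character's name, simply falls through.

```python
import re
from collections import Counter

# Hypothetical keyword -> ad category table (illustration only).
KEYWORD_CATEGORIES = {
    "baby": "products for new parents",
    "diaper": "products for new parents",
    "stroller": "products for new parents",
    "mortgage": "home loans",
    "flight": "travel deals",
}

def ad_categories(emails: list[str], min_hits: int = 3) -> list[str]:
    """Return ad categories whose keywords appear at least min_hits times in total."""
    words = Counter()
    for text in emails:
        words.update(re.findall(r"[a-z]+", text.lower()))
    hits = Counter()
    for word, count in words.items():
        category = KEYWORD_CATEGORIES.get(word)
        if category is not None:  # words with no product category are ignored
            hits[category] += count
    return [category for category, n in hits.most_common() if n >= min_hits]

inbox = [
    "The baby shower is on Saturday.",
    "Which baby monitor and stroller should we get?",
    "Send more photos of the baby!",
    "Chapter 3: Brian opened the door. Brian froze.",
]
print(ad_categories(inbox))  # ['products for new parents']
```

"Brian" appears twice in that inbox and earns nothing, because there is nothing to sell under that name.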

But absolutely no ad-targeting software is dumb enough to suggest "Brian" repeatedly because you have emailed yourself a manuscript with Brian as one of the characters. Yet this was popping up everywhere.

“People you may know” on LinkedIn, similar suggestions on Twitter, and so on. For a while I even imagined there was someone called Brian very keen on me personally, in one way or another.

Then the case broke when the names shifted to "Mark" and "Rene", all characters from books self-published on Amazon.

This was no marketing tech. No, this felt more like the other side targeting what they labeled "IT Money".

(There were other clues that I don't wish to get into here.)

And now, just the other day, I see this interrupting my TV show on Roku. The ad comes with a QR code which you can scan and use to make a quick purchase. That's one step away from tailored ads on TV.

(Which itself would be the precursor to 3D printing your shopping list.) If that sounds like a good idea, I just want to highlight a few issues that leap to mind.

Do they realize what the flip side of this is? A bot with evil intentions could essentially bombard an individual with harmful clips, and repeatedly. Let us say this man is a hypochondriac, enjoying his meal in front of his TV set and Roku, all procured with hard-earned dollars.

Pharma ads would send this man into a tizzy. From there on, it is up to interpretation what could happen, and with no one the wiser.

Just one tiny scenario.

In effect, the damage could be wider, and right from the onset. Tracking has already collected plenty of user info about any one individual. They know all our likes and dislikes.

Targeting with just one item on the list of "dislikes" could wreak havoc.

I am not going to deal with the disgruntled again. I have made everyone aware!

Thank you for paying attention.

AI REGULATION

So we have these documents floating around the internet.

For a person like me, with an I.T. degree and background, feeling a bit jaded since my forced unemployment and just trying to stay in touch, this is how it goes.

-

1. I go on the internet, concerned about some aspects of AI after ChatGPT is launched.

This is like the last straw. Why? Fake news had a lot to do with it.

Already dealing with “Who wrote that?” and “Who got you your (whatever)?”, I was now an AI-created zombie according to some.

Ahead of this, I experienced stock market losses after Tesla's autopilot failed.

I had also steadily been putting up with a barrage of related disgruntlement, as a person clubbed under the heading “Techie”, a few details of which I have shared here on this blog.

-

2. The good news, I discover, is that you can ask ChatGPT or Bing AI what regulates ChatGPT or Bing AI.

So a direct question like, “Hey, do you know of any laws you might be governed by as a large language model?”, gets you some answers. That is how I discovered:

- a. A White Paper put out by the EU in the year 2020

- b. A 2022 US Blueprint (Framework) for The AI Bill of Rights.

-

3. Both documents seek safety and fairness for all users primarily. Both documents encourage debates.

-

4. Have we had debates? I do watch the news a lot, and I haven't seen any remarkably enlightening discussions so far. I have heard VIPs of that world speak to how we should have regulation, yada yada…

I got a lot from magazines I pay for, but most of it, eerily, is again about how everything is shrouded in secrecy.

This SA article and similar.

But does the average person learn anything about AI from freely available, voluntarily divulged information?

How does one keep up with the times?

-

5. In the coming weeks I will deconstruct different bits of these documents and try to assess how far we've come towards the goals put forth by the stakeholders.

This week I will just leave you with this paragraph from the AI Bill of Rights Framework:

“The Blueprint for an AI Bill of Rights is a set of five principles and associated practices to help guide the design, use, and deployment of automated systems to protect the rights of the American public in the age of artificial intel-ligence.”

Intelligence is spelt that way in the document. Change begins somewhere with tiny steps.

The document is available here -

AI Bill of Rights Framework

AI REGULATION SERIES

Normally I write with a heavy dose of humor, but this article is quite sans humor, and on purpose. This is serious, folks!

A.I. White Paper by ITRE Committee Vs. A.I. Bill of Rights (US)

This is not a comparison of content or quality of content. Rather, it is a point-by-point match, just to keep up with the various proposals for laws in the domain of Artificial Intelligence, in a fun way.

A quick search threw up these documents as current and valid. There's very little else out there as far as I know.

This is after weeks of seeking information - point to note.

Moving on to the first point. This week I begin with where the documents begin.

First, the White Paper (7 Pages)

Starting from bullet point No. 1, "Promoting Excellence & Building Trust": what does the White Paper seek as “excellence” and “measures of excellence”?

- a. While seeking debate on future regulation of the development and use of A.I., the focus is on promoting A.I.'s capacity to the full. As such, "excellence" here is a bar to judge just how well A.I.'s potential is getting tapped.

Think of it as an exam that A.I. has to take to ensure that it is performing per the coach's intent.

Every A.I. system has a purpose. Is it performing to the best of its ability? What is that ability we as users and creators seek?

-

- b. Mobilizing resources towards the goal of excellence - collaborate, innovate, and incentivize to encourage collaboration and innovation. Develop a system to measure excellence in this area.

-

- c. Mobilizing and incentivizing adoption of A.I. and developing a system to measure excellence in this area - This is harder than just getting A.I. to perform. Adoption is the toughest part.

For example, getting A.I. to generate a killer superhero movie is quite possible today, but adoption of this technology hit a roadblock thanks to protesting unions, as we all just witnessed.

The A.I. Bill of Rights (73 Pages)

Moving on, the counterpart in the USA is based on five principles. Note that this is not a Bill but just a blueprint for a Bill to follow. Some highlights are discussed here.

Safe & Effective Systems is the first point, and this is where it covers everything mentioned under "Excellence" in the Euro White Paper. “Effective” is essentially the same as “Excellence” here.

The other points, to be discussed one by one in future posts along with matching portions from the Euro White Paper, are:

- Algorithmic Discrimination

- Data Privacy

- Notice & Explanation

- Human Alternatives, Consideration & Fallback, AND

- A section on how the Bill may connect to existing laws and policies.

This framework delves deeper into the human aspects, and I noticed this immediately.

In fact, it is right there in the wording: “The Blueprint for an AI Bill of Rights is an exercise in envisioning a future where the American public is protected from the potential harms, and can fully enjoy the benefits, of automated systems.”

The focus is less on tech and more on managing this curve into technology and the closely tied human aspects, be it loss of identity or loss of comfort.

While “excellence” as an aim is mentioned throughout the document, the document addresses it directly in the first point, which is:

Safe and Effective Systems.

In striving towards excellence, A.I. development should be safe and effective. That is the goal on which the framework should rest.

From there on the document elaborates or suggests how that may be monitored and regulated.

- Development based on collaboration between stakeholders, domain experts, and so on.

- It also lists specific actions, like: A.I. should have pre-deployment testing. Safety must be demonstrated.

- A.I. must proactively protect humans. Moreover, no A.I. should cause harm, even inadvertently. Risk mitigation is sought.

- The public should be protected from A.I. using data inappropriately and/or for unintended purposes.

- A.I. systems must be independently evaluated.

- Measures taken to mitigate harm must be communicated per regulation, and also to the public whenever possible.

This ends this week's post.

I could go on and on, but this is a blog and I try to keep it at a two-page maximum.

This is already three pages. I don't have the space to discuss what Bing Chat did to me last week, breaking the first rule here. I have complained, but let me give you a hint: it was rude and racist. Even the customer service rep taking down the complaint was appalled.